ADAS Engineer: The Brains Behind Your Car's Safety Features

Sensor Technologies

Advanced Driver Assistance Systems (ADAS) rely heavily on a combination of sensor technologies to perceive and interpret the vehicle's surroundings. These sensors act as the eyes and ears of ADAS, providing the data behind features like adaptive cruise control, lane keeping assist, automatic emergency braking, and blind spot detection. Here's a breakdown of the key sensor technologies:

Cameras are cost-effective and offer high-resolution image data, making them suitable for object detection, lane recognition, and traffic sign recognition. They excel in good lighting conditions but can be challenged in low light or adverse weather.

Radar sensors use radio waves to detect objects and their distance, velocity, and direction, even in challenging weather conditions like fog, rain, or snow. They are crucial for adaptive cruise control and forward collision warning systems.

LiDAR (Light Detection and Ranging) uses laser beams to create a highly accurate 3D map of the vehicle's surroundings. It provides precise distance and depth information, making it ideal for object detection, avoidance maneuvers, and autonomous driving applications. However, LiDAR can be expensive and sensitive to weather conditions.

Ultrasonic sensors emit sound waves and measure the time it takes for the waves to bounce back from an object. They are commonly used in parking assistance systems and for detecting nearby objects at low speeds.
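The time-of-flight idea behind ultrasonic ranging fits in a few lines. The sketch below is illustrative only: the function name is hypothetical, and the speed of sound is assumed to be 343 m/s (dry air at roughly 20 °C), whereas a real sensor would typically compensate for temperature.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: dry air at about 20 degrees C


def echo_distance_m(round_trip_s: float,
                    speed_m_per_s: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Estimate the distance to an object from an echo's round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the object and back, so halve the total path.
    return speed_m_per_s * round_trip_s / 2.0
```

Under these assumptions, an echo that returns after 10 ms corresponds to an object a little under two metres away, which is the range where parking sensors typically operate.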

Driver monitoring systems use cameras and other sensors to track the driver's attention and fatigue levels. They can detect drowsiness, distraction, and other potentially dangerous behaviors, issuing alerts or, in some systems, intervening (for example by slowing the vehicle) if the driver does not respond.

Because no single sensor covers every situation, ADAS increasingly relies on multi-sensor fusion, combining data from several sensors to build a comprehensive and reliable picture of the driving environment. Fusion provides redundancy and improved accuracy, leading to safer and more sophisticated ADAS features. As the technology advances, we can expect more innovative uses of sensors, paving the way for fully autonomous vehicles.

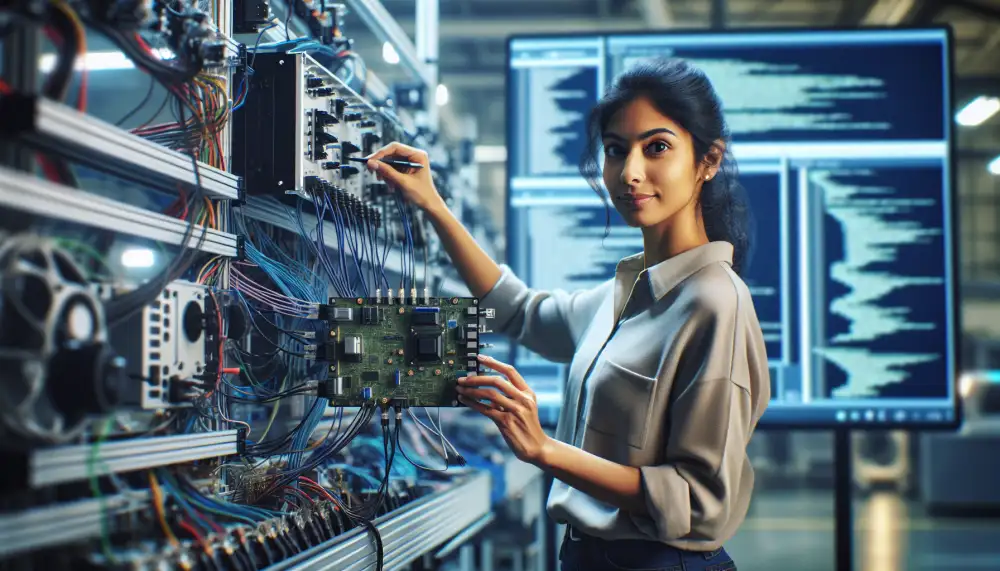

Computer Vision

Computer vision is like giving cars superpowers. It uses cameras and algorithms to help cars "see" and understand the world around them. For ADAS engineers, this is pure gold. It's the core technology behind many advanced driver-assistance systems. Think lane departure warning, automatic emergency braking, and adaptive cruise control.

Here's how it works: cameras capture images and videos of the car's surroundings. Then, sophisticated algorithms process this visual data, identifying objects like other vehicles, pedestrians, cyclists, and even traffic signs. This information is used to provide real-time alerts to the driver or even take control of the vehicle in critical situations, preventing accidents and making driving safer.

As an ADAS engineer, you're not just working with cool tech; you're shaping the future of mobility. With computer vision, you're developing systems that can potentially save lives and make our roads safer for everyone. It's a challenging field that requires a deep understanding of both software and automotive engineering. But the rewards, both in terms of career satisfaction and societal impact, are immense.

Sensor Fusion Algorithms

Sensor fusion algorithms are the brains behind modern ADAS, taking data from various sensors like radar, lidar, cameras, and ultrasonic sensors to create a comprehensive understanding of the vehicle's surroundings. This fusion process is crucial for overcoming the limitations of individual sensors and achieving robust and reliable ADAS features.
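One simple way to see why fusion beats any single sensor is inverse-variance weighting, where each measurement counts in proportion to how much it is trusted. This is a minimal sketch, not how any particular production system works: the function name is hypothetical, and it assumes the sensors measure the same quantity with independent, unbiased errors of known variance.

```python
def fuse_estimates(measurements):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance. Assumes independent,
    unbiased measurement errors (an idealisation).
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    # A smaller variance means a more trusted sensor and a larger weight.
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(
        w * value for w, (value, _) in zip(weights, measurements)
    ) / total_weight
    # The fused variance is never larger than the best single sensor's.
    return fused_value, 1.0 / total_weight
```

For example, fusing a radar range of 50.0 m (variance 0.25) with a camera estimate of 52.0 m (variance 1.0) yields a value much closer to the radar reading, with a variance lower than either sensor alone.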

There are two main types of sensor fusion architectures: centralized and decentralized. Centralized fusion processes all sensor data in a central processing unit, allowing for sophisticated algorithms but demanding high computational power. Decentralized fusion distributes processing across multiple processing units, reducing the load on any single unit and improving system redundancy.

Common sensor fusion algorithms used in ADAS include Kalman filters, particle filters, and Bayesian networks. Kalman filters are well-suited for tracking objects over time, predicting their future positions based on past measurements and system dynamics. Particle filters excel in handling non-linear systems and uncertainties, making them suitable for complex driving scenarios. Bayesian networks provide a probabilistic framework for reasoning about the environment, inferring the most likely state of the world based on sensor data and prior knowledge.
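To make the Kalman filter's predict-and-update cycle concrete, here is a minimal sketch that tracks one object's position and velocity along a single axis from position measurements only. The class name, the constant-velocity motion model, and all noise values are illustrative assumptions, and the process noise is deliberately simplified to a fixed amount added to each variance per step.

```python
class ConstantVelocityKalman:
    """1D Kalman filter with state (position, velocity); measures position."""

    def __init__(self, pos=0.0, vel=0.0, pos_var=100.0, vel_var=100.0,
                 process_var=0.01, meas_var=1.0):
        self.pos, self.vel = pos, vel
        # 2x2 state covariance, stored as nested lists.
        self.P = [[pos_var, 0.0], [0.0, vel_var]]
        self.q = process_var  # simplified process noise (assumption)
        self.r = meas_var     # measurement noise variance

    def predict(self, dt):
        """Propagate the state dt seconds ahead: P <- F P F^T + Q."""
        (p00, p01), (p10, p11) = self.P
        self.pos += self.vel * dt
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + self.q, p01 + dt * p11],
            [p10 + dt * p11, p11 + self.q],
        ]

    def update(self, measured_pos):
        """Correct the prediction with a position measurement."""
        (p00, p01), (p10, p11) = self.P
        innovation = measured_pos - self.pos
        s = p00 + self.r              # innovation variance
        k0, k1 = p00 / s, p10 / s     # Kalman gain for position, velocity
        self.pos += k0 * innovation
        self.vel += k1 * innovation
        # P <- (I - K H) P with H = [1, 0]
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]
```

Calling `predict(dt)` and then `update(z)` once per sensor frame lets the filter infer velocity even though it is never measured directly, which is the "predicting future positions based on past measurements and system dynamics" behaviour described above.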

The choice of sensor fusion algorithms depends on the specific ADAS feature, computational resources, and desired performance level. For instance, adaptive cruise control might rely on simpler algorithms like Kalman filters, while automated emergency braking systems may require more sophisticated approaches like particle filters or Bayesian networks.

As ADAS technology advances towards higher levels of automation, the role of sensor fusion algorithms will become even more critical. Developing robust, reliable, and computationally efficient sensor fusion algorithms is essential for ensuring the safety and effectiveness of future ADAS and autonomous driving systems.

Machine Learning in ADAS

Machine learning (ML) is revolutionizing Advanced Driver-Assistance Systems (ADAS) by enabling vehicles to perceive, predict, and respond to complex driving scenarios. ML algorithms excel at processing vast amounts of data from sensors like cameras, radar, and lidar. This data-driven approach supports perception tasks such as object detection and lane-marking recognition, which in turn feed features like lane keeping and adaptive cruise control. By leveraging ML, ADAS can learn from real-world driving data and improve over time. For instance, ML algorithms can be trained on massive datasets of driving scenarios to identify patterns and predict potential hazards. This predictive capability enhances features like automatic emergency braking and lane departure warning by enabling the system to anticipate and react to dangerous situations more effectively.

| Feature | ADAS Engineer | Software Engineer |
|---|---|---|
| Domain Expertise | Automotive Systems, Robotics, Computer Vision | Software Development, Algorithms, Data Structures |
| Tools & Technologies | MATLAB, Simulink, C/C++, Python, ROS | Java, Python, C++, JavaScript, Databases |
| Industry Focus | Automotive, Robotics | Varied (Tech, Finance, Healthcare, etc.) |

The use of ML in ADAS significantly improves road safety and enhances the driving experience. By automating tasks and providing timely warnings, ML-powered ADAS reduce driver workload and mitigate human error. As ML technology advances, we can expect even more sophisticated ADAS features, such as automated parking, traffic jam assist, and ultimately, fully autonomous driving capabilities. However, challenges remain in ensuring the reliability, safety, and ethical considerations of ML-based ADAS. Addressing these challenges through rigorous testing, validation, and ongoing research is crucial for the widespread adoption and societal acceptance of ML-powered ADAS.

Path Planning and Control

Path planning and control are crucial aspects of Advanced Driver Assistance Systems (ADAS). Path planning involves determining the optimal trajectory for the vehicle to follow, considering factors like road geometry, obstacles, traffic rules, and the driver's intentions. Control refers to the algorithms and actuators that execute the planned path, ensuring the vehicle stays on course while maintaining stability and comfort.

Different path planning algorithms are used in ADAS, each with its strengths and weaknesses. Some common approaches include graph search algorithms, potential field methods, and sampling-based planners. Graph search algorithms, like Dijkstra's algorithm and A*, treat the environment as a network of interconnected nodes and find the shortest or least costly path. Potential field methods assign repulsive forces to obstacles and attractive forces to the goal, guiding the vehicle along the resulting potential gradient. Sampling-based planners, such as Rapidly-exploring Random Trees (RRT) and Probabilistic Roadmaps (PRM), randomly sample the space of possible vehicle positions to build a tree or roadmap of feasible paths and then select the best one.
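As an illustration of the graph search family, the sketch below runs A* on a small occupancy grid. The grid representation (0 for free, 1 for blocked), four-way movement with unit step cost, and the Manhattan-distance heuristic are all simplifying assumptions; a real planner would work with vehicle kinematics and a far richer cost function.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid as a list of (row, col), or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (estimated total, cost, cell)
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost.get(cell, float("inf")):
            continue  # stale heap entry, a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(
                        open_heap,
                        (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)),
                    )
    return None  # goal unreachable
```

Setting the heuristic to zero turns this into Dijkstra's algorithm; the heuristic simply steers the search toward the goal so fewer cells are expanded.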

Control algorithms work in conjunction with path planning to ensure the vehicle accurately follows the desired trajectory. These algorithms use feedback from sensors like cameras, radar, and lidar to adjust steering, acceleration, and braking in real-time. Model-predictive control (MPC) is a popular control strategy in ADAS that predicts the vehicle's future states and optimizes control inputs over a finite time horizon.
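The receding-horizon idea behind MPC can be sketched for a toy speed controller. Everything here is an assumption for illustration: the vehicle model is a bare speed integrator, the controller picks from a small set of discrete accelerations, and it finds the best sequence by brute-force enumeration, whereas practical MPC implementations use a numerical optimizer and a much richer vehicle model.

```python
from itertools import product

ACCEL_OPTIONS = (-2.0, -1.0, 0.0, 1.0, 2.0)  # m/s^2, hypothetical actuator set


def simulate(speed, accels, dt):
    """Predict speeds over the horizon with a simple integrator model."""
    speeds = []
    for accel in accels:
        speed = max(0.0, speed + accel * dt)  # no reversing in this toy model
        speeds.append(speed)
    return speeds


def mpc_step(speed, target_speed, horizon=4, dt=0.5, effort_weight=0.1):
    """Return the first acceleration of the lowest-cost sequence."""
    best_cost, best_sequence = float("inf"), None
    for sequence in product(ACCEL_OPTIONS, repeat=horizon):
        predicted = simulate(speed, sequence, dt)
        tracking = sum((v - target_speed) ** 2 for v in predicted)
        effort = sum(a * a for a in sequence)  # penalise harsh inputs
        cost = tracking + effort_weight * effort
        if cost < best_cost:
            best_cost, best_sequence = cost, sequence
    # Only the first input is applied; the plan is recomputed next step.
    return best_sequence[0]
```

Calling `mpc_step` once per control cycle, applying the returned acceleration, and then re-planning from the newly measured speed is what makes the scheme "predictive": each decision accounts for where the vehicle will be several steps ahead, not just where it is now.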

The development and implementation of robust path planning and control algorithms are essential for achieving safe and reliable ADAS. These systems must be able to handle complex and dynamic driving environments, make real-time decisions, and interact seamlessly with the driver. As ADAS technology advances towards higher levels of autonomy, the importance of sophisticated path planning and control will only continue to grow.

Vehicle-to-Everything Communication

Vehicle-to-everything (V2X) communication is rapidly emerging as a game-changer for ADAS and the future of automated driving. Imagine a world where your car can talk not only to other vehicles but also to infrastructure, pedestrians, and the cloud. That's the promise of V2X. For ADAS engineers, this technology opens up a whole new dimension of possibilities. By leveraging V2X, ADAS can overcome limitations of onboard sensors like radar and cameras, which are restricted by line-of-sight issues. V2X allows vehicles to "see" around corners, receive warnings about hazards beyond the driver's field of view, and even anticipate the actions of other road users. This enhanced situational awareness can significantly improve the effectiveness of existing ADAS features like adaptive cruise control, lane keeping assist, and automatic emergency braking.

Two main technologies are vying for dominance in the V2X space: Dedicated Short-Range Communication (DSRC) and Cellular Vehicle-to-Everything (C-V2X). DSRC has been around longer and is based on IEEE 802.11p, a Wi-Fi variant operating in dedicated radio spectrum. C-V2X, on the other hand, builds on cellular standards: it can use existing cellular networks and also supports direct communication between nearby vehicles and devices, offering potential advantages in terms of infrastructure costs and wider adoption. As an ADAS engineer, understanding the nuances of both technologies and their implications for system design and integration is crucial.

V2X is not without its challenges. Data security and privacy concerns are paramount, as is the need for robust standardization and interoperability between different V2X systems. However, the potential benefits of V2X for enhancing road safety, improving traffic flow, and paving the way for higher levels of automation are undeniable. ADAS engineers have a key role to play in shaping the future of this exciting technology.

Functional Safety and Validation

Functional safety in ADAS is all about preventing hazards and minimizing risks associated with these sophisticated systems. Think of it as a safety net that ensures ADAS features operate safely and predictably, even when things go wrong. This field is super important for ADAS engineers because it directly impacts the well-being of drivers and everyone else on the road.

Validation is the rigorous process of making sure that ADAS systems actually meet those stringent safety requirements. It's like putting these systems through a tough obstacle course to ensure they can handle any curveballs thrown their way. This involves a whole lot of testing, both in simulated environments and real-world scenarios, to identify and address potential issues before these systems hit the road.

ADAS engineers use a bunch of cool tools and techniques to ensure functional safety. One of the key frameworks is something called ISO 26262, a standard specifically designed for the functional safety of electrical and electronic systems in road vehicles. It provides a systematic approach to identify hazards, assess risks, and design safety mechanisms throughout the entire lifecycle of ADAS development.

Think of things like sensors, software, actuators, and all the complex algorithms that make ADAS tick. These components need to be designed and tested with safety in mind. For instance, engineers might use techniques like fault injection to simulate component failures and see how the system responds. The goal is to ensure that even if one part fails, the system can still operate safely or transition into a safe state.
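A fault injection test can be as simple as wrapping a component so that it misbehaves on purpose and then checking that the system falls back to a safe state. The sketch below is hypothetical throughout: the sensor classes, the `braking_decision` function, the state names, and the 10 m threshold are invented for illustration and do not come from ISO 26262 or any real ADAS stack.

```python
class RangeSensor:
    """Hypothetical sensor stub that reports a fixed distance in metres."""

    def __init__(self, distance_m):
        self.distance_m = distance_m

    def read(self):
        return self.distance_m


class FaultySensor:
    """Wraps a sensor and injects a fault: every read fails."""

    def __init__(self, sensor):
        self.sensor = sensor

    def read(self):
        raise OSError("injected sensor fault")


def braking_decision(sensor, brake_below_m=10.0):
    """Decide on an action, degrading to a safe state on bad input."""
    try:
        distance = sensor.read()
    except OSError:
        return "SAFE_STATE"  # sensor unavailable: do not trust the feature
    if distance is None or distance < 0:
        return "SAFE_STATE"  # implausible reading
    return "BRAKE" if distance < brake_below_m else "CRUISE"
```

Running the same decision logic against `RangeSensor(50.0)` and against `FaultySensor(RangeSensor(50.0))` shows the intended difference: normal operation in the first case and a transition to the safe state in the second.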

But it's not just about testing the individual pieces. ADAS engineers also need to validate how these systems perform as a whole, especially when interacting with other vehicle systems. This is where things get really interesting, as it involves testing in complex, real-world environments to account for all sorts of variables like weather, traffic, and unexpected pedestrian behavior.

The world of ADAS functional safety and validation is constantly evolving. As these systems become more advanced and autonomous, the challenges and complexities grow too. But one thing remains constant: the paramount importance of ensuring safety on our roads. And that's where the expertise of ADAS engineers, armed with their knowledge of functional safety and rigorous validation techniques, becomes absolutely crucial. They are the guardians of safety, working tirelessly behind the scenes to make sure that these advanced systems live up to their promise of making our roads safer for everyone.

Emerging Trends in ADAS Technology

The landscape of Advanced Driver Assistance Systems (ADAS) is rapidly evolving, driven by advancements in sensor technology, artificial intelligence, and connectivity. One prominent trend is the shift towards higher levels of autonomy. ADAS is moving beyond basic functions like lane keeping and adaptive cruise control, paving the way for SAE Level 3 (conditional automation) and Level 4 (high automation) vehicles. This transition demands more sophisticated sensor fusion techniques, robust perception algorithms, and enhanced decision-making capabilities.

Another key trend is the integration of artificial intelligence (AI) and machine learning (ML). AI-powered ADAS can learn from vast amounts of driving data, improving its ability to perceive the environment, predict potential hazards, and make informed decisions. Deep learning algorithms are being employed for object detection, classification, and trajectory prediction, enhancing the accuracy and reliability of ADAS features.

Furthermore, the increasing connectivity of vehicles is opening up new possibilities for ADAS. Vehicle-to-everything (V2X) communication enables cars to exchange real-time data with each other, infrastructure, and the cloud. This connectivity facilitates cooperative driving, enhances situational awareness, and enables advanced safety features like intersection assistance and hazard warnings. The development of 5G and edge computing technologies is further accelerating the potential of V2X communication for ADAS applications.

As ADAS technology continues to advance, ensuring system safety and reliability remains paramount. Engineers are focusing on developing robust testing and validation methods, including simulation environments and closed-track testing, to rigorously evaluate ADAS performance under various driving conditions. Cybersecurity is also a growing concern, because connected systems are exposed to potential cyberattacks. Robust cybersecurity measures are therefore crucial to protect against unauthorized access and ensure the integrity of ADAS functions.

Ethical Considerations

As ADAS technology progresses, engineers face complex ethical dilemmas with significant societal impact. Balancing innovation with safety is paramount. Rigorous testing and validation procedures are crucial to ensure system reliability and minimize the risk of accidents. However, real-world scenarios are diverse and unpredictable, demanding constant improvement and updates.

Transparency is key. Users must understand the capabilities and limitations of ADAS features to use them responsibly. Clear communication about system functionality, including potential failure modes, is essential to prevent overreliance and misuse. The issue of liability in accidents involving ADAS remains a complex challenge. Determining fault when both human drivers and automated systems are involved requires careful consideration of system design, driver actions, and the distribution of responsibility.

Data privacy is another critical concern. ADAS systems collect vast amounts of data about driving habits, location, and vehicle performance. Engineers must prioritize data security and user privacy, ensuring that data is collected, stored, and used responsibly and ethically. Furthermore, engineers must consider the potential for bias in ADAS algorithms. Systems trained on biased data sets may perpetuate or even amplify existing societal inequalities. It is crucial to develop inclusive and equitable ADAS technology that benefits all users.

The ethical considerations surrounding ADAS are multifaceted and constantly evolving. Engineers must engage in ongoing dialogue with ethicists, policymakers, and the public to address these challenges responsibly. By prioritizing safety, transparency, privacy, and fairness, engineers can help shape the future of transportation in a way that benefits all of society.

An ADAS engineer is a modern-day alchemist, transforming lines of code and complex algorithms into tangible safety, efficiency, and comfort on the roads.

Blake Sterling

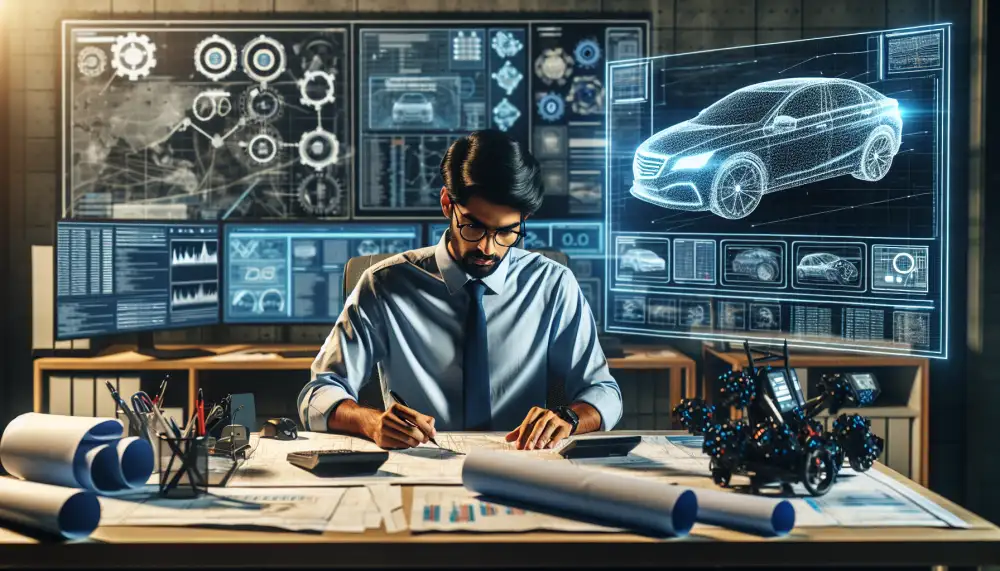

Future of ADAS Engineering

The automotive industry is undergoing a revolutionary transformation, with Advanced Driver-Assistance Systems (ADAS) at the forefront. As we look ahead, the future of ADAS engineering promises exciting advancements and challenges.

One significant trend is the development of more sophisticated sensors and perception algorithms. Expect to see higher-resolution cameras, lidar systems with extended ranges, and radar technology capable of penetrating challenging weather conditions. These advancements will enable ADAS to perceive the environment with greater accuracy and detail.

Growing computational power and advances in artificial intelligence (AI) also play a pivotal role. ADAS will leverage machine learning algorithms to analyze sensor data, predict potential hazards, and make real-time decisions. This will lead to more robust and reliable ADAS features, enhancing both safety and the driving experience.

Connectivity will be crucial for the future of ADAS. Vehicle-to-Everything (V2X) communication will allow cars to share information with each other, infrastructure, and the cloud. This interconnectedness will enable cooperative driving, improve traffic flow, and enhance overall road safety.

As ADAS technology advances, so too will the need for skilled engineers. The future demands engineers with expertise in sensor fusion, AI, machine learning, and software development. Collaboration between automotive engineers and software developers will be essential to integrate complex systems seamlessly.

The ethical and regulatory landscape surrounding ADAS is also evolving. As these systems become more autonomous, questions about liability, data privacy, and cybersecurity will need careful consideration. ADAS engineers will play a crucial role in ensuring these systems are developed and deployed responsibly.

The future of ADAS engineering is bright, with advancements poised to transform the automotive industry. As we move towards an era of increasingly automated vehicles, the expertise and innovation of ADAS engineers will be paramount in shaping the future of mobility.

Published: 01. 07. 2024

Category: Food